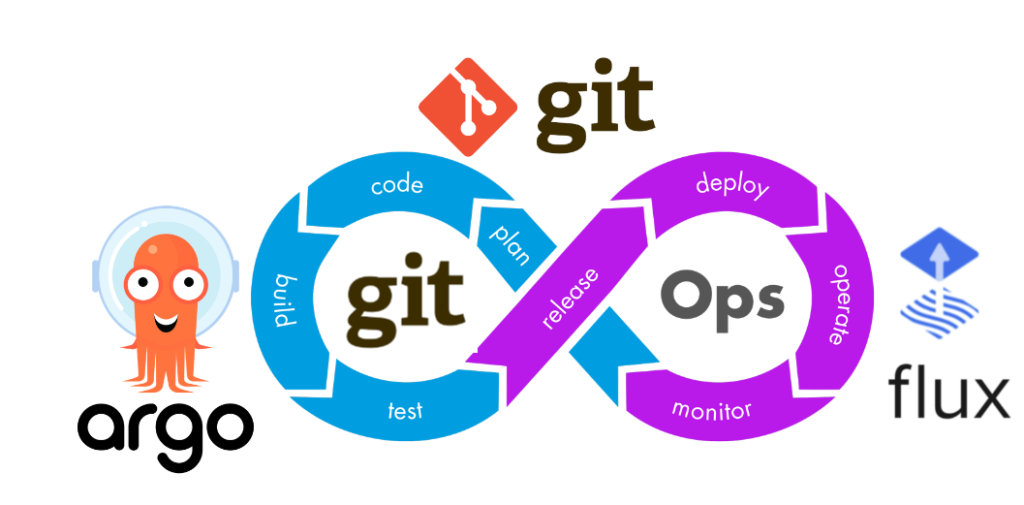

ArgoCD is a tool that provides a GitOps way of deploying applications. It supports various application formats, including Helm charts.

Helm is one of the most popular packaging formats for Kubernetes applications. It has given rise to various tools for managing Helm charts, such as Helmfile, helmify and others.

Why is ArgoCD replacing Helm managers?

For a long time, Helm managers have helped to deploy Helm charts using different deployment strategies. They served well, but their big disadvantage was code drift, which accumulated over time.

One way to avoid code drift was to schedule a periodic job that applied the latest changes to the Helm charts. Sometimes it worked, but most often, if one of the charts hit an installation issue, all the other charts stopped being updated as well.

So it wasn’t an ideal way to handle it.

This is where ArgoCD comes to the rescue. ArgoCD lets you control the update of each chart as a dedicated process, so an issue in one chart does not block updates to the other charts. Helm charts are first-class citizens in ArgoCD, which supports most of Helm's features.

What does it look like in ArgoCD?

To try it out, let's start with a simple Application that installs the Prometheus Helm chart with a custom configuration.

The Prometheus Helm chart can be found at https://prometheus-community.github.io/helm-charts

The custom configuration will be stored in our internal project https://git.example.com/org/value-files.git

Thanks to multi-source support in Application, we can use the official chart and the custom configuration together, as in the following example:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    spec:
      sources:
        # Official chart from the Helm repository
        - repoURL: 'https://prometheus-community.github.io/helm-charts'
          chart: prometheus
          targetRevision: 15.7.1
          helm:
            valueFiles:
              - $values/charts/prometheus/values.yaml
        # Git repository that holds the custom values file
        - repoURL: 'https://git.example.com/org/value-files.git'
          targetRevision: dev
          ref: values

Here `$values` refers to the root folder of the value-files repository. That values file overrides the default Prometheus settings, so we can configure the chart for our needs.
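To make the override more concrete, here is a minimal sketch of what `charts/prometheus/values.yaml` in the value-files repository might contain. The specific settings are assumptions chosen for illustration, not the configuration used in this article; check the chart's own default `values.yaml` for the keys available in the version you deploy.

```yaml
# charts/prometheus/values.yaml (illustrative overrides only)
server:
  retention: 30d        # assumed value: how long metrics are kept
  persistentVolume:
    size: 20Gi          # assumed value: storage for the Prometheus server
alertmanager:
  enabled: false        # assumed: Alertmanager is not needed in this setup
pushgateway:
  enabled: false        # assumed: Pushgateway is not needed in this setup
```

Only the keys listed in this file replace the chart defaults; everything else keeps the values shipped with the chart.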

What’s next?

There are several distinct features that Helm managers support, so your next step is to check whether ArgoCD covers all your needs.

One notable feature is secrets support. Many managers support secrets in SOPS format, whereas ArgoCD doesn’t give you any solution, so it’s up to you how to manage secrets.

Another important feature is order of execution, which can be an important part of your Helm manager setup. ArgoCD doesn’t have a direct built-in replacement, so you have to rely on the Helm format to express dependencies. One way is to build umbrella charts for complex applications.
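As a rough sketch of the umbrella chart idea, the `Chart.yaml` below bundles two charts as dependencies of one parent chart, so that ArgoCD deploys them as a single Application. The chart name `monitoring-stack`, the `my-exporter` dependency and the `https://charts.example.com` repository are hypothetical and only serve as placeholders.

```yaml
# Chart.yaml of a hypothetical umbrella chart
apiVersion: v2
name: monitoring-stack          # hypothetical chart name
description: Umbrella chart that bundles the charts of one application
type: application
version: 0.1.0
dependencies:
  - name: prometheus
    version: 15.7.1
    repository: https://prometheus-community.github.io/helm-charts
  - name: my-exporter           # hypothetical internal chart
    version: 1.0.0
    repository: https://charts.example.com
```

Values for each dependency go under a top-level key named after the dependency in the umbrella chart's own values file.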

The most important change is the GitOps style of deployment. ArgoCD doesn’t run a CI/CD pipeline, so you don’t have a feature like helm diff to preview changes before applying them. With automated sync enabled, it applies changes as soon as they become available in the linked Git repository.
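Whether changes are applied automatically is controlled by the sync policy of the Application. The fragment below is a minimal sketch that assumes you want fully automated syncing; the `prune` and `selfHeal` settings are illustrative choices, not a recommendation from this article.

```yaml
# Fragment of an Application spec (other fields omitted)
spec:
  syncPolicy:
    automated:
      prune: true       # delete resources that were removed from Git
      selfHeal: true    # revert manual changes made directly in the cluster
```

If the `automated` block is left out, the Application is only synced when someone triggers it, which can serve as a simple manual gate before changes reach the cluster.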

Conclusions

- ArgoCD can replace Helm managers, but it strongly depends on your project's needs.

- ArgoCD introduces new challenges, such as secrets management and order of execution.

- It introduces the GitOps deployment style and replaces the usual CI/CD pipeline, so new “quality gates” need to be built before it is ready for a production environment.