Recently I had a pleasure to test self-hosted AI model with RAG support for code suggestion task. Results as most predicted: the cost/performance ratio for small teams are still in favors of proprietary solutions.

Here’s a breakdown of the hardware, quality, and cost realities for teams considering open-source Large Language Models (LLMs) with Retrieval Augmented Generation (RAG) support.

💸 The High Cost of the “Free” Model

While the models themselves (like Qwen or Mistral) are open-source, the Total Cost of Ownership (TCO) for the required infrastructure quickly escalates, making small, self-hosted LLMs surprisingly inefficient.

1. The Entry-Level Trap (1.5B Parameter Model)

- Model Tested: Qwen2.5-Coder-1.5B-Instruct.

- Hardware Required: A GPU with at least 4 GB of VRAM, typically running on an entry-level cloud GPU instance like an NVIDIA T4 (15 GB) on GCP.

- Estimated Monthly Cost: Running a T4 instance 24/7 (which is necessary to prevent long cold-start times) easily starts at $340 per month (based on standard cloud GPU rates and VM costs).

- Conclusion: Running this 1.5B model is often the minimum price for maximum disappointment. The model’s limited reasoning capacity means the quality of code suggestions is very poor. RAG provides the context, but the small model struggles to synthesize it effectively.

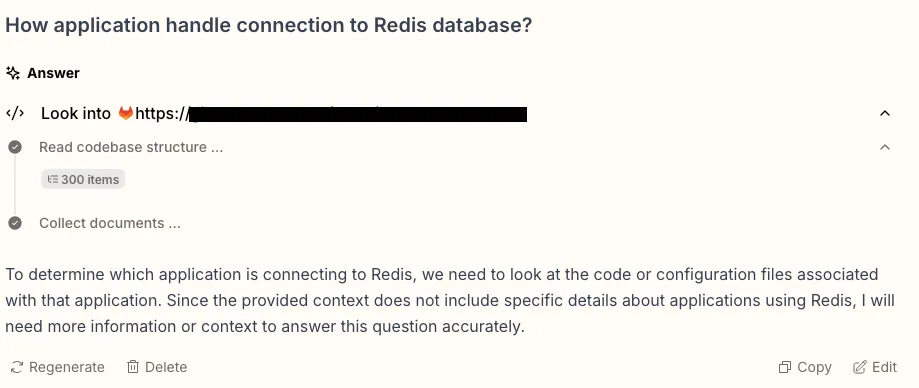

Example:

It read 300 items from code base and still asks for more information.

2. The Performance Baseline (7B Parameter Model)

7B or better 20B models seems are bare minimum for a code suggestion, but they come with much higher support costs estimated in $1000+ a month for infrastructure only.

What make it worse that tools like Tabby set a limit on a number of “Free” users. After 5 users please be nice and subscribe for $20/month per user.

🚀 Closing the Quality Gap with new standards

Proprietary models like ChatGPT, Gemini, and Claude produce superior results because of their advanced reasoning, but their ability to use your specific, private code is now often managed via a local context or new standards like MCP, A2A and recently announced Cloude Skills.

Claude Skills (Anthropic)

- What it is: A feature that allows developers to provide Claude with a self-contained unit of specialized logic, often in the form of a Python script, along with specific instructions.

- Some see it as a lighter-weight or more context-efficient alternative to using a full MCP server for certain functions.

Model Context Protocol (MCP)

- What it is: An open protocol originally created by Anthropic that standardizes the way an LLM-powered application connects to and uses external capabilities like APIs, databases, and file systems. It formalizes the concepts of Tools (functions the model can invoke) and Resources (data the model can read).

Agent-to-Agent Protocol (A2A)

- What it is: An open protocol focused entirely on interoperability between autonomous AI agents. It provides a standard for agents to discover each other’s capabilities (via Agent Cards), securely delegate complex work (Tasks), and reliably exchange structured results (Artifacts).

Conclusion

Proprietary models and new protocols to manage the complex RAG/MCP pipeline through a reliable API often provides superior quality and better cost control.

However in tight environments with a dedicated MLOps team running self-hosted version seems much more available than ever.