What is Top-K problem?

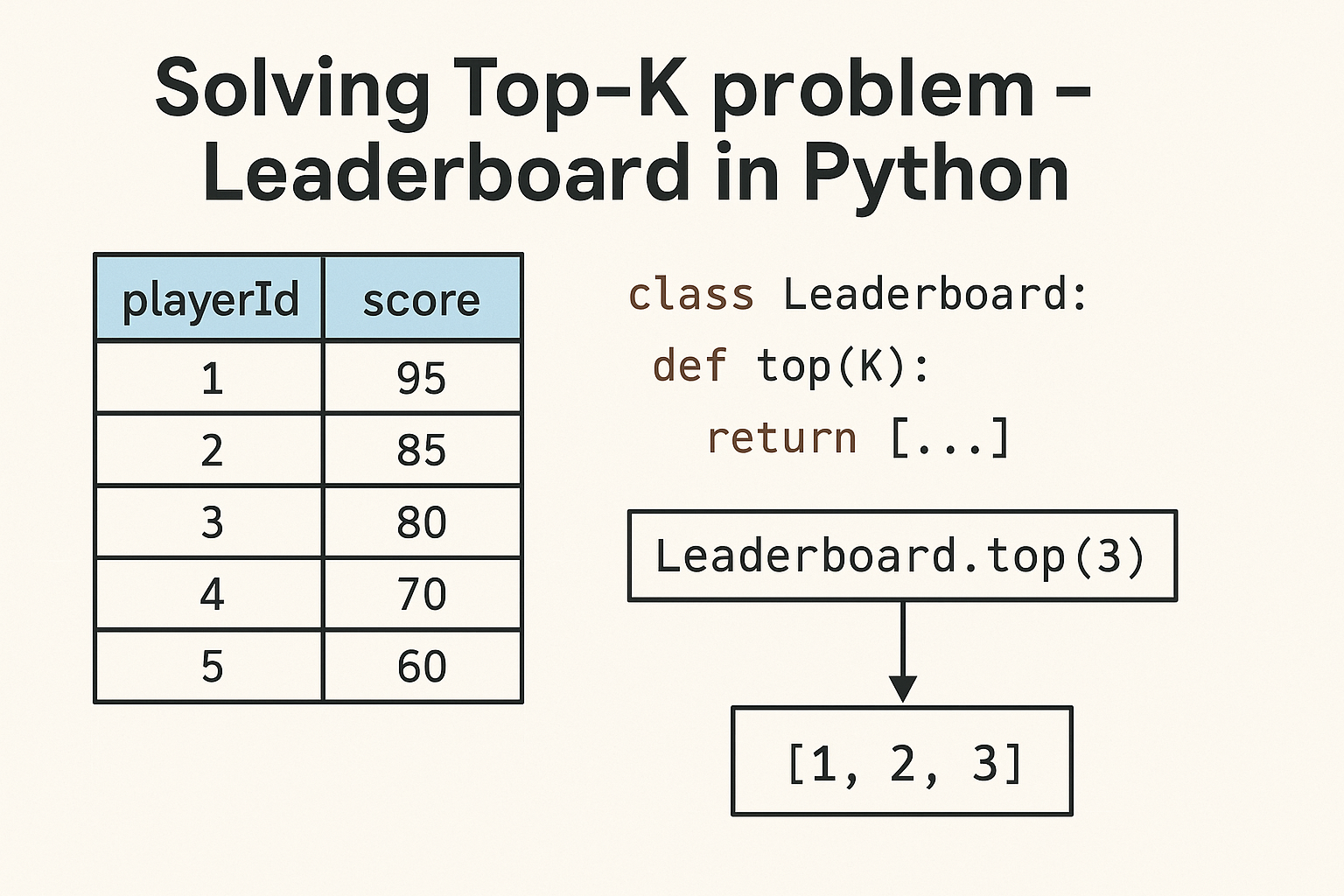

Think about Real-time Gaming Leaderboard with a rank system. Determining the rank of a player is a problem we are trying to solve.

This is implementation of Real-time Leaderboard solution from the System Design interview book.

Check out full source code at Leaderboard – solving Top K Problem with Python Github project.

Leader board requirements

- Get top 10 ranked players

- Show a current rank for each player

- Show a slice of players around specific rank

Solution 1: using SQL database

Direct approach is to use SQL kind of database and count rank in real time using count until Player score points >= all other players. This solution fall short as soon as we need to scale it for high number of players.

Solution 2: using Valkey/Redis sorted set

Valkey sorted set is a special engine type which keeps all records sorted according to a “score” which was made for exactly this kind of problem and it’s what we are going to use.

Implementation

Top-k-probelm(Leaderboard) project is built using FastAPI, SQLModel and Valkey.

API Design

API design was borrowed from the System Design Interview book. According to requirements it covers CRUD operations and getting rank operation.

1. Create Score

POST /scores

{

"name": "AwesomeGamer"

}

2. Update Score

PUT /scores

{

"id": 1,

"points": 10

}

3. Get TOP Scores

GET /scores

4. Get user rank

GET /scores/id

Players data model and API: SQLModel + FastAPI

I am using SQLite table to store user profile and current score of a player. Several SQLModel classes helps to customize API endpoints.

class ScoreBase(SQLModel):

name: str | None = Field(default=None)

class Score(ScoreBase, table=True):

id: int | None = Field(default=None, primary_key=True)

points: int = Field(default=0)

rank: int = Field(default=0)

class ScoreCreate(ScoreBase):

pass

class ScorePublic(ScoreBase):

id: int

points: int

name: str

rank: int

And APIs using FastAPI parameters and response model

@app.post("/scores", response_model=ScorePublic)

def create_score(data: ScoreCreate, session: SessionDep):

@app.put("/scores", response_model=ScorePublic)

def update_scores(id: int, points: int, session: SessionDep):

@app.get("/scores", response_model=list[ScorePublic])

def get_top_scores(session: SessionDep,

offset: int = 0,

limit: Annotated[int, Query(le=10)] = 10):

@app.get("/scores/{id}", response_model=ScorePublic)

def get_score(id: int, session: SessionDep):Both libraries provides very flexible and powerful way to API design.

Rank solution based on Valkey sorted set

From Valkey documentation

A Sorted Set is a collection of unique strings (members) ordered by an associated score. When more than one string has the same score, the strings are ordered lexicographically. Some use cases for sorted sets include:

- Leaderboards. For example, you can use sorted sets to easily maintain ordered lists of the highest scores in a massive online game.

- Rate limiters. In particular, you can use a sorted set to build a sliding-window rate limiter to prevent excessive API requests.

ZREVRANGE helps to get top 10 players sorted in reverse order by their score for our leader board.

ZREVRANGE leaderboard 0, 10

ZRANK helps to get specific player rank.

ZRANK leaderboard idFinally, using ZRANK following by ZREVRANGE we can also provide a list of players who is near by to a specific player inside the rank.

ZRANGE leaderboard 77

# rank 255

ZREVRANGE leaderboard 255, 250

# 5 players before

ZREVRANGE leaderboard 260, 255

# 5 players after

Conclusions

- FastAPI and SQLModel provides great interfaces to design APIs with nice domain model integration

- Valkey soreted set is a built-in solution for Top-k problem

- It’s not Python native, but if you are looking for more leet code solution on Python then check out this awesome video