Hey folks,

A month ago I got involved in an AWS project based on Lambda functions. In this article I will explain what I have learned so far and how to create a production AWS Lambda environment with best practices in mind.

I will start at the top level and explain everything you need for a basic infrastructure that supports your Lambda functions and the other applications in your cloud.

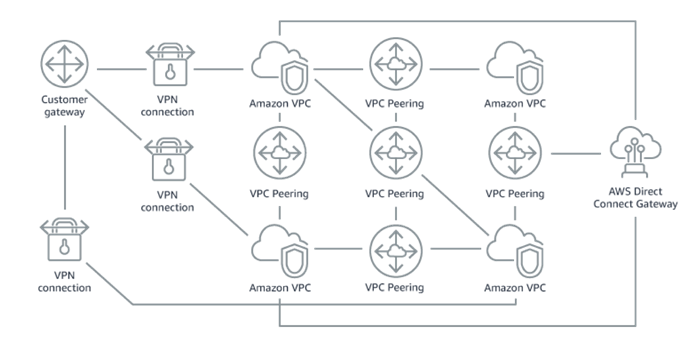

VPC

First, you need to create a dedicated VPC and reserve an IP range that doesn’t conflict with your other networks, in case you later need to peer them. As a general rule, you should never use the default VPC for production.
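Checking for overlaps before you commit to a range is easy to automate. The sketch below uses Python's standard `ipaddress` module; the existing ranges and the `find_conflicts` helper are hypothetical, so substitute your own office, VPN and VPC ranges.

```python
import ipaddress

def find_conflicts(candidate: str, existing: list[str]) -> list[str]:
    """Return the existing CIDR blocks that overlap the candidate VPC range."""
    new_net = ipaddress.ip_network(candidate)
    return [cidr for cidr in existing if new_net.overlaps(ipaddress.ip_network(cidr))]

# Hypothetical ranges already in use: an existing VPC and an office network.
existing_networks = ["10.0.0.0/16", "192.168.0.0/24"]

print(find_conflicts("10.0.128.0/17", existing_networks))  # ['10.0.0.0/16']
print(find_conflicts("10.20.0.0/16", existing_networks))   # []
```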

Create a security group that only allows incoming traffic on ports 80 and 443.
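As a sketch, the rules can be expressed in the `IpPermissions` shape that the EC2 API uses for ingress rules. The helper name and the `0.0.0.0/0` source are assumptions for illustration; the code only builds the data and does not call AWS.

```python
def web_ingress_rules(ports=(80, 443), source_cidr="0.0.0.0/0"):
    """Build one TCP ingress permission per allowed port, in the EC2 IpPermissions shape."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [{"CidrIp": source_cidr, "Description": f"allow {port}"}],
        }
        for port in ports
    ]

rules = web_ingress_rules()
print([r["FromPort"] for r in rules])  # [80, 443]
```

With boto3, a list like this can be passed as `ec2.authorize_security_group_ingress(GroupId=sg_id, IpPermissions=rules)`, where `sg_id` is your (hypothetical here) security group ID. Every port not listed stays closed, because security groups deny inbound traffic by default.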

Subnets

You need at least four subnets: two private and two public. Each type of subnet has to be split across at least two different availability zones.
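One way to lay this out is to carve equal-sized blocks from the VPC range, one public and one private per availability zone. This is a minimal sketch assuming a hypothetical `10.20.0.0/16` VPC, `/24` subnets and example AZ names; `plan_subnets` is an illustrative helper, not an AWS API.

```python
import ipaddress

def plan_subnets(vpc_cidr: str, azs: list[str], new_prefix: int = 24) -> dict[str, str]:
    """Carve one public and one private subnet per availability zone out of the VPC range."""
    blocks = ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix)
    plan = {}
    for az in azs:
        plan[f"public-{az}"] = str(next(blocks))
    for az in azs:
        plan[f"private-{az}"] = str(next(blocks))
    return plan

# Hypothetical VPC range and AZ names.
for name, cidr in plan_subnets("10.20.0.0/16", ["eu-west-1a", "eu-west-1b"]).items():
    print(name, cidr)
# public-eu-west-1a 10.20.0.0/24
# public-eu-west-1b 10.20.1.0/24
# private-eu-west-1a 10.20.2.0/24
# private-eu-west-1b 10.20.3.0/24
```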

Public subnets contain the resources that need a direct connection to the internet, such as an internet-facing ELB or a bastion host (your SSH jump server).

Private subnets contain all your infrastructure servers, such as web servers, database servers or backend applications.

Note that you should never place your infrastructure servers in public subnets.

Internet gateway and NAT

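To function properly, your VPC has to be attached to an internet gateway, and your private subnets need NAT: a NAT gateway placed in a public subnet, with the private subnets’ route table sending outbound internet traffic to it.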
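A VPC route table sends each packet to the most specific route that matches its destination. The sketch below models that longest-prefix match for a public and a private route table; the VPC range and the `igw-example` / `nat-example` targets are hypothetical placeholders.

```python
import ipaddress

# Hypothetical route tables; "local" covers traffic that stays inside the VPC range.
PUBLIC_ROUTES = {"10.20.0.0/16": "local", "0.0.0.0/0": "igw-example"}
PRIVATE_ROUTES = {"10.20.0.0/16": "local", "0.0.0.0/0": "nat-example"}

def next_hop(routes: dict[str, str], destination: str) -> str:
    """Pick the target of the most specific (longest-prefix) route containing the destination."""
    addr = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(cidr).prefixlen, target)
        for cidr, target in routes.items()
        if addr in ipaddress.ip_network(cidr)
    ]
    return max(candidates, key=lambda c: c[0])[1]

print(next_hop(PRIVATE_ROUTES, "10.20.2.15"))    # local
print(next_hop(PRIVATE_ROUTES, "203.0.113.10"))  # nat-example
print(next_hop(PUBLIC_ROUTES, "203.0.113.10"))   # igw-example
```

The only difference between the two tables is the default route: public subnets reach the internet directly through the internet gateway, while private subnets go out through NAT and cannot be reached from outside.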

MySQL

For the database I use MySQL on RDS. Disable public access to the instance and deploy it into the private subnets. In its security group, allow incoming connections on port 3306 only from the internal IP range. So we have double protection here: the security group, and a database that is only reachable through its internal DNS name.
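Security groups only contain allow rules, and anything not explicitly allowed is dropped. This simplified local model shows what the database rule admits, assuming a hypothetical VPC range of `10.20.0.0/16`; `DB_INGRESS` and `is_allowed` are illustrative names, not how AWS evaluates rules internally.

```python
import ipaddress

# Hypothetical rule: the MySQL port is open only to the VPC's internal range.
DB_INGRESS = [{"port": 3306, "source": "10.20.0.0/16"}]

def is_allowed(rules, source_ip: str, port: int) -> bool:
    """Return True if any allow rule matches both the port and the source address."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule["port"] == port and addr in ipaddress.ip_network(rule["source"])
        for rule in rules
    )

print(is_allowed(DB_INGRESS, "10.20.2.15", 3306))    # True: e.g. a Lambda in a private subnet
print(is_allowed(DB_INGRESS, "203.0.113.10", 3306))  # False: outside the VPC
print(is_allowed(DB_INGRESS, "10.20.2.15", 22))      # False: port not opened
```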

There are a lot of best practices for setting up a production-ready MySQL instance, so I will skip most of them, but what you definitely need is a read replica and a shadow copy enabled. Make sure you set a maintenance window that is right for you.
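RDS takes the preferred maintenance window as a `ddd:hh24:mi-ddd:hh24:mi` string in UTC, and the window must be at least 30 minutes long. The validator below is a hypothetical local helper for catching mistakes before you apply the setting; it handles windows that wrap around the end of the week.

```python
import re

DAYS = ["mon", "tue", "wed", "thu", "fri", "sat", "sun"]
WINDOW = re.compile(r"^([a-z]{3}):(\d{2}):(\d{2})-([a-z]{3}):(\d{2}):(\d{2})$")
WEEK_MINUTES = 7 * 24 * 60

def _minute_of_week(day: str, hh: str, mm: str) -> int:
    if day not in DAYS or int(hh) > 23 or int(mm) > 59:
        raise ValueError(f"bad day or time: {day}:{hh}:{mm}")
    return DAYS.index(day) * 1440 + int(hh) * 60 + int(mm)

def window_minutes(window: str) -> int:
    """Length in minutes of a 'ddd:hh24:mi-ddd:hh24:mi' UTC window, wrapping across the week."""
    m = WINDOW.match(window)
    if not m:
        raise ValueError(f"bad format: {window!r}")
    start = _minute_of_week(*m.groups()[:3])
    end = _minute_of_week(*m.groups()[3:])
    return (end - start) % WEEK_MINUTES

def check_maintenance_window(window: str) -> None:
    """Raise ValueError if the window is malformed or shorter than 30 minutes."""
    if window_minutes(window) < 30:
        raise ValueError(f"{window!r} is shorter than 30 minutes")

print(window_minutes("sun:05:00-sun:06:00"))  # 60
print(window_minutes("sun:23:30-mon:00:30"))  # 60 (wraps into Monday)
```

Keep in mind that the maintenance window must not overlap the backup window either.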

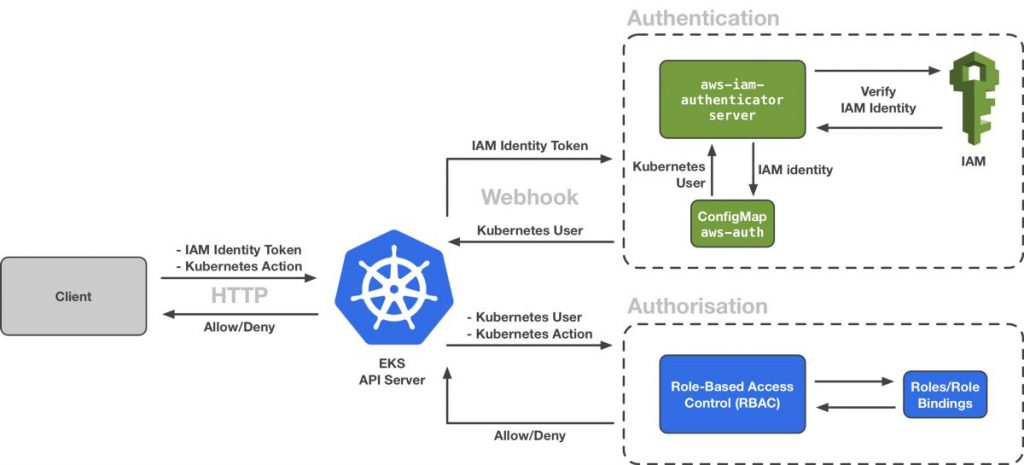

Lambda functions

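To access our private database, Lambda functions need to be deployed inside the same VPC, in the private subnets. To expose Lambda functions over HTTPS endpoints, attach API Gateway. Lambda functions are invoked through the Lambda service rather than through inbound connections, so their security group does not need inbound rules for ports 80 and 443; what it needs is outbound access to the database on port 3306.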
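With API Gateway's Lambda proxy integration, the HTTP request arrives in the `event` dict and the function returns a dict with `statusCode`, `headers` and a string `body`. The handler below is a minimal sketch of that contract; the `name` query parameter is a hypothetical example, and the MySQL access is left out because it needs a third-party driver.

```python
import json

def lambda_handler(event, context):
    """Minimal handler for an API Gateway Lambda proxy integration."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # A real function would query MySQL here via the RDS internal endpoint.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }

# Local usage with a trimmed-down, hypothetical event:
resp = lambda_handler({"queryStringParameters": {"name": "vpc"}}, None)
print(resp["statusCode"], resp["body"])  # 200 {"message": "hello, vpc"}
```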

That’s pretty much it, but you will often have other web applications running in your VPC, and to route traffic properly between Lambda and those apps you need a web proxy such as nginx.

Nginx

Nginx is the best way to get a common entry point for your web applications and Lambda functions. Application Load Balancers can now invoke Lambda functions directly as targets, but in my view that option isn’t mature enough yet.

For a reliable and secure nginx setup, use the common AWS pattern of an ELB, an Auto Scaling group, a launch configuration and security groups.

On the configuration side, nginx proxies traffic to the Lambda functions through API Gateway.
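The routing decision nginx makes can be sketched in a few lines: for prefix `location` blocks it picks the longest matching prefix, and when `proxy_pass` includes a URI, the matched prefix is replaced by that URI. The routes below are hypothetical: an API Gateway stage URL (format `https://{api-id}.execute-api.{region}.amazonaws.com/{stage}`) and an internal app address. This Python model only illustrates the logic; it does not cover nginx regex locations.

```python
# Hypothetical upstreams: an API Gateway stage and an internal app in a private subnet.
ROUTES = {
    "/api/": "https://abc123.execute-api.eu-west-1.amazonaws.com/prod/",
    "/": "http://10.20.2.40:8080/",
}

def upstream_for(path: str) -> str:
    """Pick the longest matching prefix and replace it with the upstream URI."""
    prefix = max((p for p in ROUTES if path.startswith(p)), key=len)
    return ROUTES[prefix] + path[len(prefix):]

print(upstream_for("/api/orders/42"))
# https://abc123.execute-api.eu-west-1.amazonaws.com/prod/orders/42
print(upstream_for("/dashboard"))
# http://10.20.2.40:8080/dashboard
```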

Elastic load balancer

Here you need to decide which kind of ELB suits your needs. I chose an ELB with HTTPS support, which provides SSL termination. In the ELB security group I allowed all incoming traffic on ports 80 and 443.

Launch configuration

In the launch configuration you define which kind of instance to launch when autoscaling is triggered.

Autoscaling group

The ASG defines the desired number of instances you want running at any given moment. Using metrics such as CPU utilization, you can configure it to scale out or in, within the minimum and maximum number of instances you set.
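To build intuition for CPU-based scaling, here is a simplified proportional model: if the fleet runs hotter than the target, add instances in proportion, then clamp to the ASG's minimum and maximum. This is an illustration only, not the exact algorithm AWS uses for target tracking; the function and parameter names are hypothetical.

```python
import math

def desired_capacity(current: int, avg_cpu: float, target_cpu: float,
                     min_size: int, max_size: int) -> int:
    """Estimate how many instances bring average CPU back to target, clamped to ASG bounds."""
    if current == 0:
        return min_size
    needed = math.ceil(current * avg_cpu / target_cpu)
    return max(min_size, min(max_size, needed))

print(desired_capacity(2, 90.0, 50.0, min_size=2, max_size=6))  # 4: scale out
print(desired_capacity(4, 10.0, 50.0, min_size=2, max_size=6))  # 2: scale in, floored at min
print(desired_capacity(5, 95.0, 50.0, min_size=2, max_size=6))  # 6: capped at max
```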

Almost there!

The last step is to connect the ELB to the ASG, and the ASG to the launch configuration!

Note that I have skipped setting up the target group and health checks, but they are pretty basic.
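For reference, target group health checks flip a target's state only after a run of consecutive results: it becomes healthy after `HealthyThresholdCount` passes in a row and unhealthy after `UnhealthyThresholdCount` failures in a row. The replay function below is a simplified model of that behaviour (it ignores the initial and draining states); the default thresholds are example values.

```python
def health_states(results, healthy_threshold=3, unhealthy_threshold=2, start="unhealthy"):
    """Replay health-check results (True = passed) and return the state after each check."""
    state, streak, last = start, 0, None
    states = []
    for ok in results:
        # Count consecutive identical results.
        streak = streak + 1 if ok == last else 1
        last = ok
        if ok and state == "unhealthy" and streak >= healthy_threshold:
            state = "healthy"
        elif not ok and state == "healthy" and streak >= unhealthy_threshold:
            state = "unhealthy"
        states.append(state)
    return states

print(health_states([True, True, True, False, False, True]))
# ['unhealthy', 'unhealthy', 'healthy', 'healthy', 'unhealthy', 'unhealthy']
```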

That’s it!

Now you have a good starting point for developing with AWS Lambda in combination with a general web tier architecture.

What’s next?

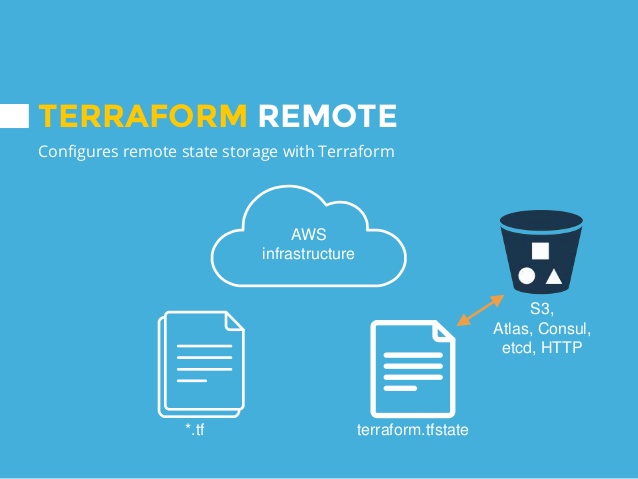

The second part of this topic is setting up CI and automation. Next time I will write about how to code the infrastructure with Terraform, build an nginx image with Packer and run configuration management with Ansible.