In the world of Site Reliability Engineering (SRE), few tools have sparked as much debate as Netflix’s Chaos Monkey. What started as an innovative approach to building resilient systems has become a lightning rod for controversy, dividing the engineering community into passionate camps. After years of watching teams struggle to implement it, I’m convinced that the tool’s widespread misuse has created more problems than it has solved.

The Promise vs. Reality

Netflix first described Chaos Monkey publicly in the early 2010s and open-sourced it in 2012. The premise was simple: randomly terminate instances in production to force teams to build more resilient systems. The idea was sound: if your system can’t handle the unexpected failure of a single component, it’s not truly robust. But somewhere between Netflix’s careful, strategic implementation and the broader industry adoption, something went wrong.

Anecdotal evidence is easy to find. Reddit discussions in r/sre are filled with horror stories of cascading failures triggered by poorly timed Chaos Monkey runs. Hacker News threads describe teams spending more time recovering from simulated failures than actually improving their systems. As one frustrated developer put it: “Chaos Monkey causes chaos, it does not fix it.”

The Case Against Overuse

The critics’ arguments are compelling, and they draw on practitioners’ firsthand accounts:

Reduced Developer Productivity: Constant, unpredictable instance terminations disrupt development workflows. Teams report spending excessive time rolling back changes and restoring services instead of building new features. When your developers are in perpetual recovery mode, innovation suffers.

False Sense of Security: Perhaps more dangerously, frequent disruptions create complacency. Teams become so accustomed to recovering from simulated failures that they lose focus on preventative measures. The perception of resilience becomes skewed – surviving artificial chaos doesn’t guarantee handling real-world incidents.

Operational Overhead Explosion: Every Chaos Monkey run requires investigation, analysis, and often rollback procedures. This consumes significant resources that could be better spent on proactive improvements and strategic technical debt reduction.

Misinterpretation of Failure Data: Controlled, short-lived interruptions bear little resemblance to sustained, complex outages. The data collected during Chaos Monkey tests cannot reliably inform long-term architectural decisions on its own, because the test conditions differ fundamentally from genuine failure scenarios.

The Netflix Model: What Actually Works

Netflix’s original implementation wasn’t the blanket rollout many teams have adopted. Their approach was surgical, strategic, and tied to specific maturity milestones. They ran Chaos Monkey at reduced scale, focused on services that were already stable, and closely monitored the results.

The key difference? Netflix treated Chaos Monkey as a precision instrument, not a blunt force tool. Their engineers understood that the goal wasn’t to cause disruption – it was to observe system behavior under stress and identify specific weaknesses.

The Middle Ground: Disciplined Chaos

This doesn’t mean Chaos Monkey is inherently flawed. When used correctly, it can provide valuable insights. The critical factors for success include:

Service Maturity Requirements: Deploy only on stable, well-understood services. Using Chaos Monkey on immature systems is like stress-testing a house of cards – you’ll learn it falls down, but not much else.

Controlled Frequency: Experiments should be carefully scheduled and spaced, not constant background noise. Teams need time to implement improvements between tests.

Clear Success Metrics: Define specific learning objectives before each experiment. What exactly are you trying to discover or validate?

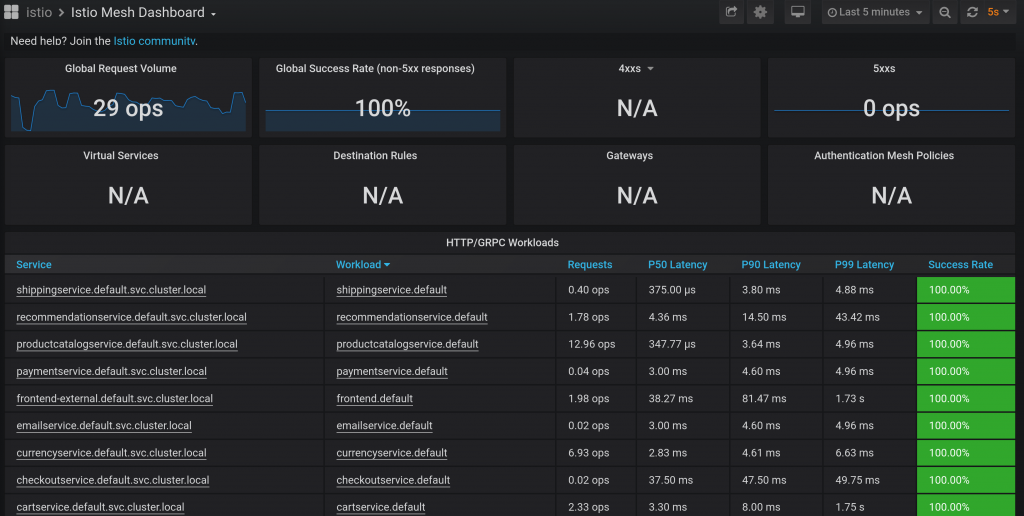

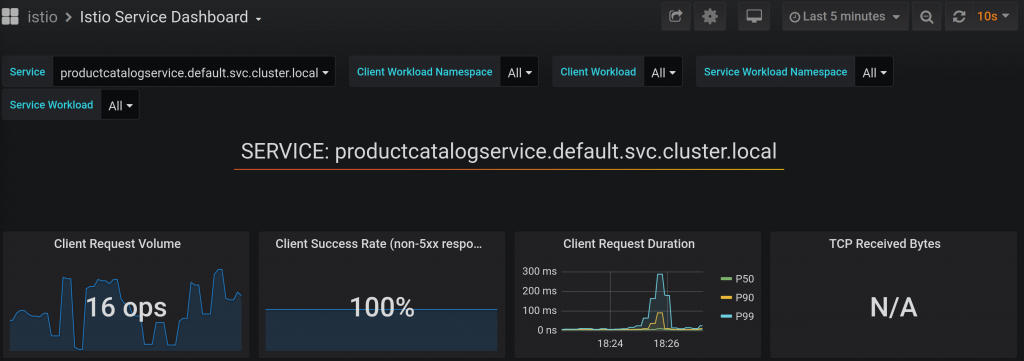

Comprehensive Monitoring: Ensure you can observe and analyze the complete impact of each test, not just surface-level metrics.
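The four factors above can be sketched as a pre-flight gate that refuses to run a test until each one is satisfied. This is a minimal illustration, not part of Chaos Monkey itself: the `ServiceState` and `ExperimentPlan` types, the `blocking_reasons` function, and the 30-day and 14-day thresholds are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ServiceState:
    """Hypothetical snapshot of a service; every field is an illustrative assumption."""
    name: str
    days_since_last_incident: int
    has_dashboards_and_alerts: bool
    last_experiment_at: Optional[datetime]


@dataclass
class ExperimentPlan:
    """Hypothetical description of one planned chaos test."""
    hypothesis: str  # what the team expects to discover or validate


# Illustrative thresholds only; tune them to your own context.
MIN_STABLE_DAYS = 30
MIN_SPACING = timedelta(days=14)


def blocking_reasons(service: ServiceState, plan: ExperimentPlan, now: datetime) -> List[str]:
    """Return every reason the test should not run; an empty list means go."""
    reasons = []
    # Service maturity: only test services that have been stable for a while.
    if service.days_since_last_incident < MIN_STABLE_DAYS:
        reasons.append("service not yet stable")
    # Controlled frequency: leave time to act on the previous test's findings.
    if (service.last_experiment_at is not None
            and now - service.last_experiment_at < MIN_SPACING):
        reasons.append("too soon after previous experiment")
    # Clear success metrics: a test without a stated objective teaches little.
    if not plan.hypothesis.strip():
        reasons.append("no learning objective defined")
    # Comprehensive monitoring: the impact must be observable.
    if not service.has_dashboards_and_alerts:
        reasons.append("monitoring incomplete")
    return reasons


now = datetime(2024, 6, 1)
checkout = ServiceState("checkout", 45, True, datetime(2024, 5, 25))
plan = ExperimentPlan("Losing one instance keeps p99 latency under 300 ms")
print(blocking_reasons(checkout, plan, now))  # ['too soon after previous experiment']
```

Returning the list of reasons, rather than a bare yes or no, gives the team something concrete to fix before the next attempt.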

My Take: Strategy Over Chaos

After witnessing both spectacular failures and genuine successes with Chaos Monkey, I believe the tool’s value lies in its strategic application, not its frequency of use. The teams that succeed with chaos engineering treat it like any other engineering discipline – with careful planning, clear objectives, and disciplined execution.

The controversy surrounding Chaos Monkey ultimately reflects a broader issue in our industry: the tendency to adopt powerful tools without fully understanding their appropriate context and limitations. Netflix built Chaos Monkey for their specific needs, scale, and organizational maturity. The problems arise when teams apply it blindly without considering their own unique circumstances.

Looking Forward

The evolution toward tools like Chaos Gorilla, which simulates the loss of an entire availability zone, and toward more sophisticated chaos engineering frameworks shows the industry is learning. We’re moving away from random disruption toward targeted, hypothesis-driven resilience testing. This represents a maturation of the chaos engineering discipline: from “break things and see what happens” to “systematically validate our assumptions about system behavior.”
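To make “hypothesis-driven” concrete, here is a minimal sketch of one experiment loop: check the steady state, inject a single failure, check again, and always roll back. It does not use any real chaos framework’s API. The `run_experiment` function and the `ToyCluster` class are hypothetical, and the cluster is an in-memory stand-in for real infrastructure.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ExperimentResult:
    hypothesis: str
    steady_before: bool
    steady_during: Optional[bool]  # None when the failure was never injected

    @property
    def hypothesis_held(self) -> bool:
        return self.steady_before and self.steady_during is True


def run_experiment(
    hypothesis: str,
    steady_state: Callable[[], bool],
    inject: Callable[[], None],
    rollback: Callable[[], None],
) -> ExperimentResult:
    """Run one experiment: verify steady state, inject, re-verify, roll back."""
    if not steady_state():
        # Never inject failure into a system that is already unhealthy.
        return ExperimentResult(hypothesis, False, None)
    inject()
    try:
        steady_during = steady_state()
    finally:
        rollback()  # restore the system even if the check itself raises
    return ExperimentResult(hypothesis, True, steady_during)


class ToyCluster:
    """In-memory stand-in for real infrastructure (an assumption for this example)."""

    def __init__(self, instances: int, min_healthy: int) -> None:
        self.instances = instances
        self.min_healthy = min_healthy

    def healthy(self) -> bool:
        return self.instances >= self.min_healthy

    def terminate_one(self) -> None:
        self.instances -= 1

    def restore_one(self) -> None:
        self.instances += 1


cluster = ToyCluster(instances=3, min_healthy=2)
result = run_experiment(
    "The service stays healthy after losing one instance",
    cluster.healthy,
    cluster.terminate_one,
    cluster.restore_one,
)
print(result.hypothesis_held)  # True
```

The result records whether a stated assumption survived, which is a different kind of output from a log of what broke.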

The lesson isn’t to abandon chaos engineering, but to approach it with the same rigor we apply to any other engineering practice. Used strategically, tools like Chaos Monkey can strengthen systems. Used carelessly, they strengthen nothing but frustration levels.

The choice is ours: embrace disciplined chaos or remain victims of chaotic discipline.